A couple of posts ago, I described the machine learning model that we developed to predict the extent of cropland irrigation worldwide. This post collects everything I learned about Google Earth Engine that went into running a global-scale model successfully.

The importance of selection effect

It was the first class of a data science course in the MIDS program. Our instructor started off with a question: “Do you think this academic program gives you a better career?” Naive as we were, we gave a bunch of answers. Of course it does; is this even a question at this point?! Yes, here are all the new things we’re learning. Yes, the instructors are top-notch. Yes, look at the syllabus and the projects, yada yada … nothing surprising there.

The instructor asked, “Yes, but how do you know that the program is making you better? What if you joined the program because you were motivated enough to apply and work through it, and therefore you’d get better in your career anyway?”

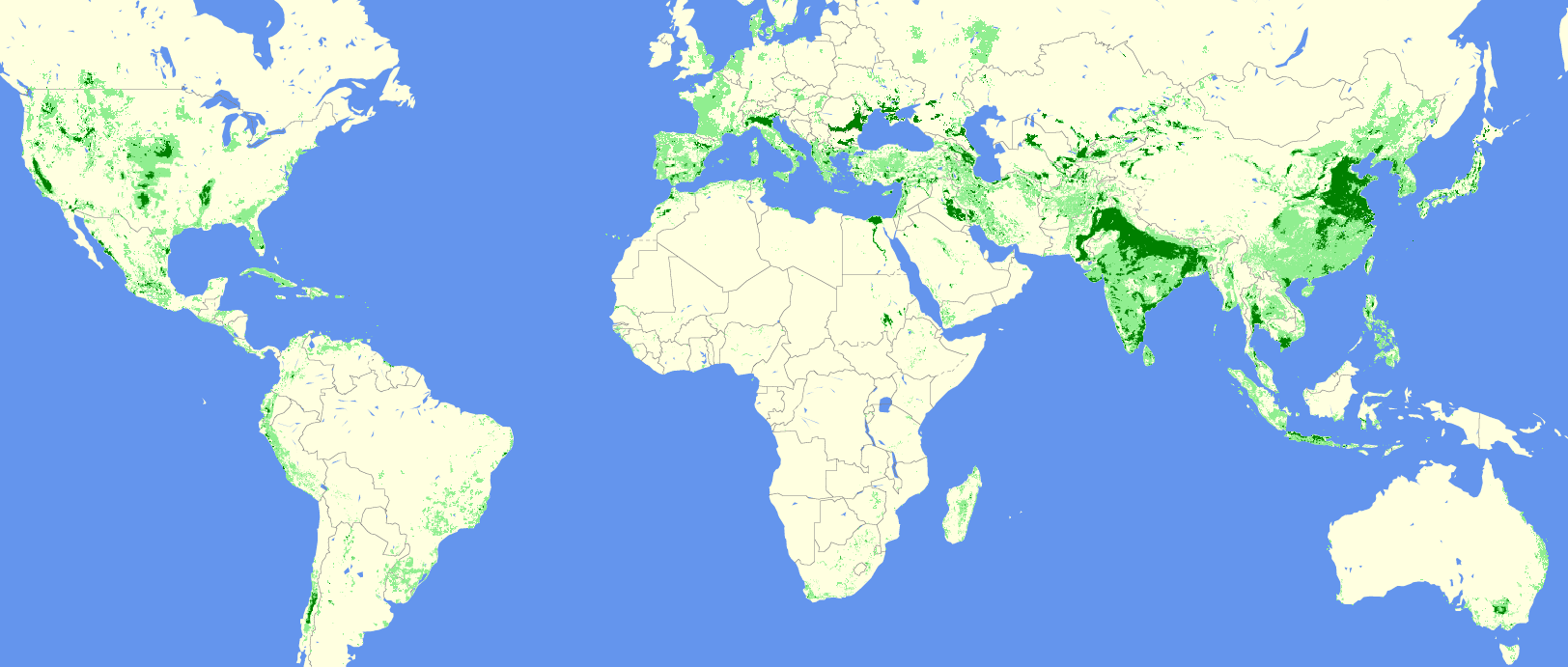

Global Irrigation Map, our capstone machine learning project

For our capstone project at UC Berkeley, we worked with the Department of Environmental Studies. We developed a machine learning model that we continued to improve even after the course term ended, and I’m happy to report that it is now under discussion for publication as a paper, a first for the program itself, according to our lecturer.

Update (Aug 5, 2020): New blog post with a behind-the-scenes look at how we built the model.

Lazy evaluation in real life

There are so many great ideas in engineering we can take home and apply to our own lives. Today I will talk about one of them: lazy evaluation.

How are you saving your knowledge?

We live in a “knowledge economy”. Every day brings with it new information. Do you have an external system to store your knowledge efficiently and effectively?

Notes on Spark Streaming app development

This post collects various notes from the second half of this year. We learned a lot while getting a streaming model working and ready for production. We used Spark Structured Streaming and wrote the code in Scala. Our model was stateful, and both our source and sink were Kafka.

Privacy in today's age with a SOCKS proxy

Say you are at a cafe, and you want to surf the Web. But the WiFi is not secure. Or say your company lets you bring your laptop, but what if its firewall has blocked your favorite website? Is there no hope, besides paying $15 to a VPN provider?

There is, and it costs about $3.50 per month as of this writing.

The Spark tunable that gave me 8X speedup

There are many configuration tunables in Spark. However, if you have time for only one, set this one. It made a streaming application we run process data 8X faster. That’s a 700% improvement, no code change needed!

Getting top-N elements in Spark

The documentation for PySpark’s top() function has this warning:

This method should only be used if the resulting array is expected to be small, as all the data is loaded into the driver’s memory.

This piqued my interest: why would you need to bring all the data to the driver, if all you need is a few top elements?

The answer is: it does not load all the data into the driver’s memory.
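To illustrate why a top-N query need not pull everything to one place, here is a minimal sketch in plain Python (no Spark involved). It assumes the common two-step approach: each partition is first reduced to its own N largest elements, and those small lists are then merged. The function name `top_n` and the sample partitions are hypothetical, made up for this illustration.

```python
import heapq
from functools import reduce


def top_n(partitions, n):
    """Return the n largest elements across all partitions, in descending order."""
    # Step 1 (would run on the executors): shrink each partition to at most n items.
    local_tops = [heapq.nlargest(n, part) for part in partitions]
    # Step 2: merge the small lists pairwise, keeping only n candidates after each merge.
    return reduce(lambda a, b: heapq.nlargest(n, a + b), local_tops, [])


# Hypothetical data: three "partitions" of an imaginary dataset.
partitions = [[5, 1, 9], [7, 3], [8, 2, 6, 4]]
result = top_n(partitions, 3)
print(result)
```

In this sketch, at most N elements per partition ever reach the merge step, so the memory needed at the merge point grows with N and the number of partitions, not with the size of the data.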

Livy is out of memory

Spark jobs were failing. All of them. The data pipeline had stopped. This is a tale of high-pressure debugging.
