Sorry about that jargon soup, but this is a technical post, written for a technical audience.

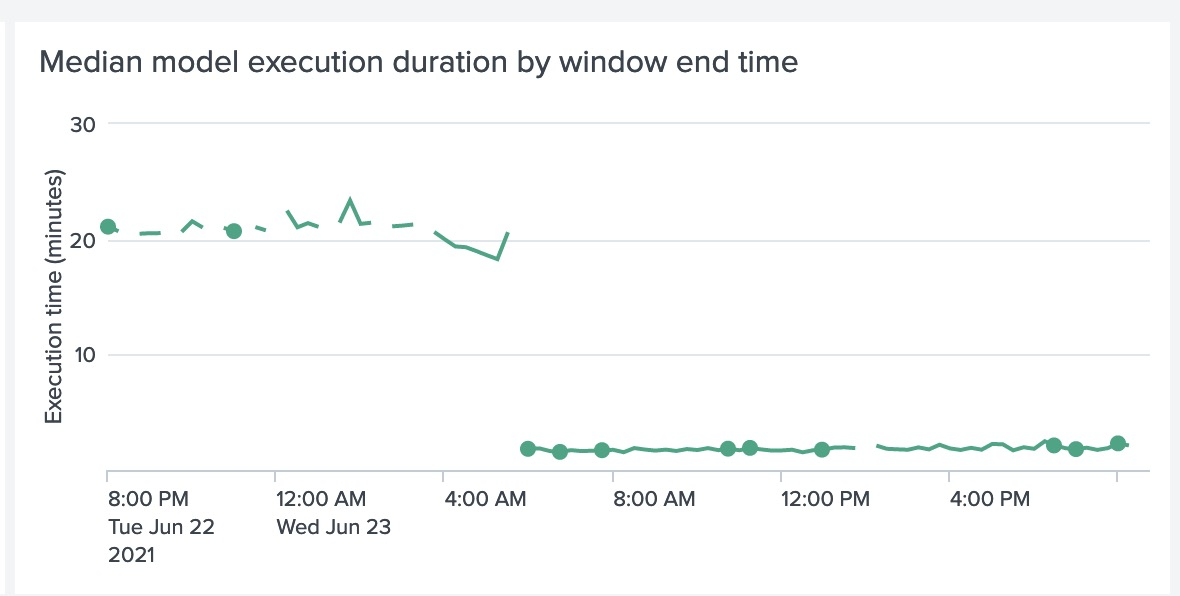

I recently had an opportunity to speed things up by 10X:

Two Kinds of I/O

Programs can become slow when they spend most of their time waiting for messages to be sent or received. I/O (“Input/Output”) where the program stops until the operation completes is called “blocking” or “synchronous” I/O. In contrast, with “asynchronous” I/O the program tells the operating system about an I/O operation and goes on doing other things; the OS notifies the program when the operation completes (or fails), and the program then resumes from where it requested that operation.

Python got a new framework for asynchronous I/O, async-await, starting in version 3.5. However, you cannot lay it on top of blocking I/O calls: if a coroutine calls a blocking function, the calling thread still goes to sleep, taking the event loop and every other waiting coroutine with it, so your efforts on asynchronous I/O are wasted.
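A minimal sketch of the problem, using only the standard library. `async_send()` and `blocking_send()` are hypothetical stand-ins for a send call, with `asyncio.sleep()` and `time.sleep()` playing the role of the network wait. Three awaited sleeps overlap, so the batch should take about one `DELAY`; three blocking sleeps inside coroutines run back to back, because each one stalls the event loop.

```python
import asyncio
import time

DELAY = 0.2  # simulated network latency per send, in seconds (an assumption)


async def async_send(msg: int) -> int:
    # Non-blocking wait: control returns to the event loop while "waiting".
    await asyncio.sleep(DELAY)
    return msg


async def blocking_send(msg: int) -> int:
    # Blocking wait inside a coroutine: the whole event-loop thread sleeps,
    # so no other coroutine can run in the meantime.
    time.sleep(DELAY)
    return msg


async def timed(send, n: int) -> float:
    # Run n sends "concurrently" and measure the wall-clock time.
    start = time.perf_counter()
    await asyncio.gather(*(send(i) for i in range(n)))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"async:    {asyncio.run(timed(async_send, 3)):.2f}s")
    print(f"blocking: {asyncio.run(timed(blocking_send, 3)):.2f}s")
```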

What to do? You can replace your blocking function with an async-ready one, but sometimes that is not an option. In that case, you can create (A) a pool of worker threads and (B) a queue of messages to be sent, and have the worker threads pick up messages from the queue. The nice thing is that even when one thread goes to sleep on a blocking call, other threads are likely available to keep making progress.
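The pattern above can be sketched by hand with `threading` and `queue.Queue`. This is a minimal illustration, not production code: `blocking_send()` is a hypothetical stand-in for the blocking call, and `None` is used as a "no more work" sentinel, one per worker.

```python
from __future__ import annotations

import queue
import threading
import time

NUM_WORKERS = 4


def blocking_send(msg: str) -> None:
    # Hypothetical stand-in for a blocking library call.
    time.sleep(0.1)


def worker(q: queue.Queue, sent: list[str], lock: threading.Lock) -> None:
    while True:
        msg = q.get()
        if msg is None:  # sentinel: no more work for this worker
            return
        blocking_send(msg)  # only this thread sleeps; the others keep working
        with lock:
            sent.append(msg)


def send_all(messages: list[str]) -> list[str]:
    q: queue.Queue = queue.Queue()  # (B) the queue of messages to be sent
    sent: list[str] = []
    lock = threading.Lock()
    # (A) the pool of worker threads, all reading from the same queue
    threads = [
        threading.Thread(target=worker, args=(q, sent, lock))
        for _ in range(NUM_WORKERS)
    ]
    for t in threads:
        t.start()
    for msg in messages:
        q.put(msg)
    for _ in threads:
        q.put(None)  # sentinels go after all real messages (FIFO order)
    for t in threads:
        t.join()
    return sent
```

This sketch has no error handling: if `blocking_send()` raises, that worker thread dies and the caller never hears about it. Reporting failures back to the caller, along with managing the queue and the pool, is what the concurrent.futures library packages up.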

Your Futures are Concurrent

Since version 3.2, the concurrent.futures library in Python provides easy access to this pattern of task queues and worker pools. You don’t need async-await to use this library.
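A minimal sketch of the library in use, with no async-await anywhere. `blocking_send()` is a hypothetical placeholder; `ThreadPoolExecutor` and its `map()` method are the standard-library API.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def blocking_send(msg: str) -> str:
    # Hypothetical stand-in for a blocking send.
    time.sleep(0.1)
    return f"sent {msg}"


with ThreadPoolExecutor(max_workers=4) as pool:
    # map() queues every call at once and yields results in input order;
    # at most 4 calls run at the same time.
    results = list(pool.map(blocking_send, ["a", "b", "c", "d", "e"]))

print(results)  # ['sent a', 'sent b', 'sent c', 'sent d', 'sent e']
```

Leaving the `with` block waits for all queued calls to finish and then shuts the pool down.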

Here is an example run. I set up 4 worker threads and gave them 50 messages to send. To simulate slowness, I made each send() take 1 second. I also made some sends fail at random, to check whether failures are reported back to the caller.

You can see that the pool of 4 workers started sending 4 messages immediately, leaving no idle workers. The calls for the remaining messages still returned right away, because those messages were simply queued; workers picked them up as earlier sends finished:

```text
# we can send 4 messages right away, because we have 4 workers
2021-06-17 15:42:37,673 sending msgs: starting
2021-06-17 15:42:37,675 sending msg: 0
2021-06-17 15:42:37,675 call to send msg 1 / 50
2021-06-17 15:42:37,675 sending msg: 1
2021-06-17 15:42:37,675 call to send msg 2 / 50
2021-06-17 15:42:37,675 sending msg: 2
2021-06-17 15:42:37,675 call to send msg 3 / 50
2021-06-17 15:42:37,676 sending msg: 3
2021-06-17 15:42:37,676 call to send msg 4 / 50
2021-06-17 15:42:37,676 call to send msg 5 / 50
2021-06-17 15:42:37,676 call to send msg 6 / 50
2021-06-17 15:42:37,676 call to send msg 7 / 50
2021-06-17 15:42:37,676 call to send msg 8 / 50
2021-06-17 15:42:37,676 call to send msg 9 / 50
2021-06-17 15:42:37,676 call to send msg 10 / 50

# ... and so on, we are queuing all the remaining messages ...

2021-06-17 15:42:37,678 call to send msg 49 / 50
2021-06-17 15:42:37,678 call to send msg 50 / 50
2021-06-17 15:42:38,677 sending msg: 4
2021-06-17 15:42:38,677 sending msg: 5
2021-06-17 15:42:38,678 sending msg: 6
2021-06-17 15:42:38,678 sending msg failed for msg 3: send failed on msg: 3
2021-06-17 15:42:38,678 sending msg: 7
2021-06-17 15:42:39,681 sending msg: 8
2021-06-17 15:42:39,681 sending msg: 9
2021-06-17 15:42:39,681 sending msg: 10
2021-06-17 15:42:39,681 sending msg: 11
2021-06-17 15:42:39,681 sending msg failed for msg 7: send failed on msg: 7
2021-06-17 15:42:40,686 sending msg: 12
2021-06-17 15:42:40,686 sending msg: 13

# ... and so on, we are sending 4 messages every second ...

2021-06-17 15:42:49,715 sending msg: 48
2021-06-17 15:42:49,715 sending msg: 49
2021-06-17 15:42:50,719 sending msgs: finished
```
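A sketch of a program that produces output of this shape, assuming a 10% failure rate. This is not the author's source; the names `send`, `report`, `send_all`, and `FAIL_RATE` are hypothetical. Failures are surfaced through `Future.add_done_callback()` and `Future.exception()`, both standard concurrent.futures API.

```python
import functools
import logging
import random
import time
from concurrent.futures import Future, ThreadPoolExecutor

# The default asctime format has the same shape as the timestamps in the log.
logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
log = logging.getLogger(__name__)

NUM_WORKERS = 4
NUM_MSGS = 50
SEND_SECONDS = 1.0  # simulated slowness of each send
FAIL_RATE = 0.1     # hypothetical chance that a send fails


def send(msg: int, seconds: float, fail_rate: float) -> int:
    # Hypothetical slow, sometimes-failing send; runs in a worker thread.
    log.info("sending msg: %d", msg)
    time.sleep(seconds)
    if random.random() < fail_rate:
        raise RuntimeError(f"send failed on msg: {msg}")
    return msg


def report(msg: int, fut: Future) -> None:
    # Done-callback: exception() returns None if the send succeeded.
    exc = fut.exception()
    if exc is not None:
        log.info("sending msg failed for msg %d: %s", msg, exc)


def send_all(n_msgs=NUM_MSGS, n_workers=NUM_WORKERS,
             seconds=SEND_SECONDS, fail_rate=FAIL_RATE) -> list:
    log.info("sending msgs: starting")
    futures = []
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        for i in range(n_msgs):
            # submit() only queues the call, so this loop finishes almost at once.
            fut = pool.submit(send, i, seconds, fail_rate)
            log.info("call to send msg %d / %d", i + 1, n_msgs)
            fut.add_done_callback(functools.partial(report, i))
            futures.append(fut)
    # Leaving the with-block waits for every queued send to finish.
    log.info("sending msgs: finished")
    # True for each message that was sent without an exception.
    return [f.exception() is None for f in futures]


if __name__ == "__main__":
    send_all()
```

With 50 messages, 4 workers, and 1-second sends, the run should take about 13 seconds (50 / 4 rounds, rounded up), in line with the timestamps above.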

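As an aside, the concurrent.futures library also lets you create process pools. Whether to use processes or threads depends on whether your tasks are computational (“CPU-bound”) or chatty (“I/O-bound”): in standard CPython, the global interpreter lock (GIL) lets only one thread execute Python bytecode at a time, so threads suit I/O-bound work that mostly waits, while CPU-bound work needs separate processes to run in parallel. Processes are traditionally heavyweight, although modern operating systems blur the boundary between processes and threads quite a bit; Linux, for example, uses the same underlying system call, clone(), to create either.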
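Because `ThreadPoolExecutor` and `ProcessPoolExecutor` share the same `Executor` interface, switching between them can be a one-line change. A minimal sketch, where `io_task`, `cpu_task`, and `make_executor` are hypothetical helpers:

```python
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor
import time


def io_task(n: int) -> int:
    # I/O-bound stand-in: spends its time waiting, so threads overlap well.
    time.sleep(0.05)
    return n


def cpu_task(n: int) -> int:
    # CPU-bound stand-in: pure-Python arithmetic holds the GIL, so separate
    # processes are needed to use several cores in parallel.
    return sum(i * i for i in range(n))


def make_executor(cpu_bound: bool, workers: int = 4) -> Executor:
    # Both classes provide submit(), map(), and shutdown().
    return ProcessPoolExecutor(workers) if cpu_bound else ThreadPoolExecutor(workers)


if __name__ == "__main__":
    with make_executor(cpu_bound=False) as pool:
        print(list(pool.map(io_task, range(8))))
    # The __main__ guard matters: worker processes may re-import this module.
    with make_executor(cpu_bound=True) as pool:
        print(list(pool.map(cpu_task, [10**6] * 4)))
```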

A Wait Unnecessary

The async-await framework can also use a thread pool like the one above; the glue is the event loop's run_in_executor() method. I think that glue is unnecessary here.
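For comparison, here is roughly what that glue looks like: the same pool-of-threads work as before, plus an event loop. `blocking_send()` and `send_all()` are hypothetical; `loop.run_in_executor(executor, func, *args)` is the asyncio API.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_send(msg: int) -> int:
    # Hypothetical blocking call that cannot be made async-aware.
    time.sleep(0.1)
    return msg


async def send_all(n_msgs: int, n_workers: int = 4) -> list:
    loop = asyncio.get_running_loop()
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # run_in_executor() hands each call to the pool and returns an
        # awaitable, so the event loop stays free while the threads block.
        tasks = [loop.run_in_executor(pool, blocking_send, i) for i in range(n_msgs)]
        return await asyncio.gather(*tasks)


if __name__ == "__main__":
    print(asyncio.run(send_all(8)))
```

The threads do exactly the same work either way; the event loop only pays off if the program also has other coroutines to run concurrently.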

In general, I prefer the simplest possible solution. If a thread-pool library solves the problem cleanly and completely, there is no need to add an async-await shim on top of it. Every added component brings risks from its interactions with the other components, and increases the cognitive load on the maintainer.

Show Me the Source

Source code for both sample programs, including full outputs, is available:

Where to Go Next

There are certainly other ways to solve concurrency problems. Threads are often the simplest solution, but any shared state between them can cause nasty, unpredictable bugs. Asynchronous I/O is not a panacea either, because it breaks code flow and readability by yielding control wherever the solution requires I/O.
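A minimal sketch of guarding shared state between threads, using a hypothetical sent-message counter updated from a pool. `counter += 1` is a read-modify-write, so without the lock two threads could read the same value and one increment would be lost.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

counter = 0                      # hypothetical shared state
counter_lock = threading.Lock()  # protects every access to counter


def record_send(_msg: int) -> None:
    global counter
    # Only one thread at a time may read and update the counter.
    with counter_lock:
        counter += 1


with ThreadPoolExecutor(max_workers=4) as pool:
    # list() drains the results, so any exception in a worker surfaces here.
    list(pool.map(record_send, range(10_000)))

print(counter)  # 10000
```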

It’s useful to be familiar with other approaches to concurrency; to that end, I recommend Seven Concurrency Models in Seven Weeks by Paul Butcher (2014).